Defining a way to help young people think critically when using generative AI (prototyping)

Young people wanted to learn something new, not just be told about AI risks they already knew about

We’re co-creating a way of helping 15 to 17 year olds understand, examine and use generative AI in positive ways. The National Citizen Service (NCS) posed the question:

“How might we support young people to become thoughtful makers and critical users of this technology?”

NCS and the Centre for the Acceleration of Social Technology (CAST) asked dxw family-member Neontribe to carry out the project. We’re collaborating with young people from youth organisations Beats Bus, The Politics Project and Warrington Youth Zone. They’re sharing their expertise with us while also learning about the digital design process.

We began the project in November, starting with a discovery phase, which Hannah shared in her blog post. This post covers how we worked with young people to create a digital tool that can form part of enrichment activities and programmes delivered outside the standard education curriculum.

Answering the question

We started to define how we’d answer NCS’s question by running an online group workshop with 15 young people to generate ideas. We turned their ideas into 2 early concepts designed to be used in group sessions.

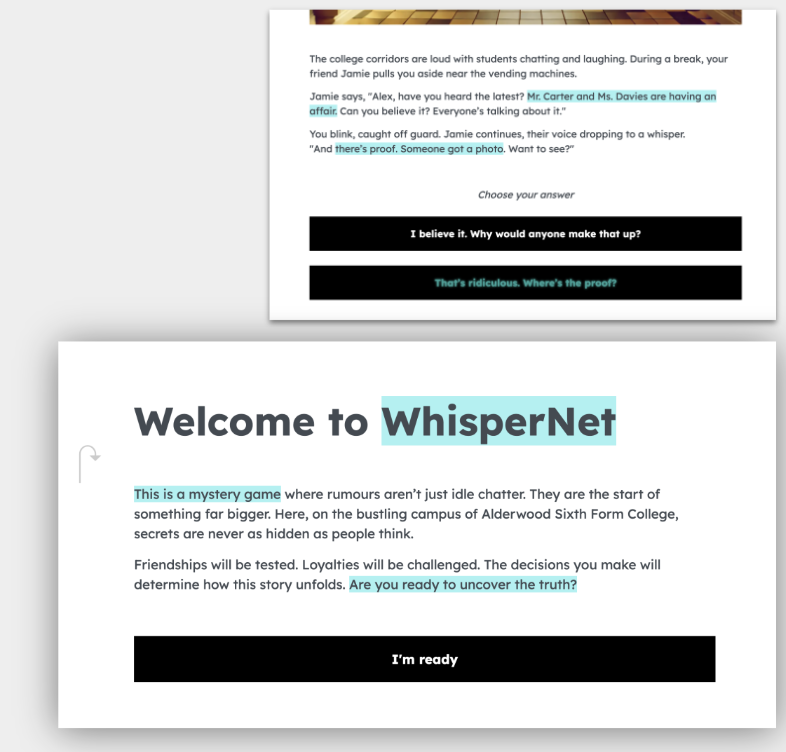

Concept 1: WhisperNet – interacting with a mystery story

In this concept, young people are led through a game where they choose their own path to understanding the moral aspects of using generative AI to create deepfakes. We used a story-game framework called Twine to quickly produce a prototype that our young people could test.

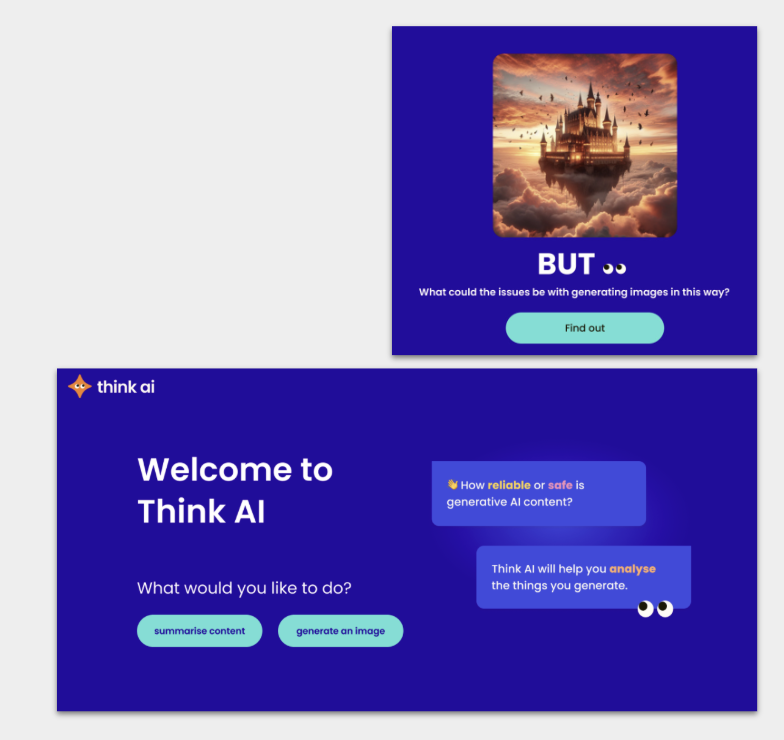

Concept 2: ThinkAI – reflecting on the issues with generative AI’s responses

Our second concept was a tool to support young people in questioning and analysing the responses they get from generative AI. The tool generated an image or summarised some text in response to a prompt, and wrapped those responses in content that highlighted some of the potential issues with what was produced. We prototyped the tool in a graphics package called Figma to get early feedback.

What we learned

We wanted to hear our young people’s thoughts about both concepts. They felt ThinkAI was more compelling and therefore a better answer to NCS’s question. They gave 2 main reasons:

- they were able to have more control over what they put in and got out

- it was quicker to use, and could be used multiple times

They wanted to learn something new, not just be told about AI risks they already knew about. Even at this early stage, they confirmed we were helping by:

- showing them issues that are discussed less often

- giving them new perspectives on issues they were already familiar with

- guiding critical thinking with reflective questions

- suggesting ways in which the responses given might be biased

We also learned where the prototypes needed more work.

Many young people struggled to read complex AI-generated text, even when the language was simplified. We needed to make the text more readable.

They wanted to reflect on their learning and have a choice in what to do next, rather than ending on a negative note focusing on the issues with AI. There were individual preferences on what to do next depending on learning styles and comfort with AI tools. We needed to leave them in a positive mental space.

The “group use” vs “individual use” toggle we’d added caused confusion, and the 2 paths were not different enough to be useful. We needed to concentrate on one path and help facilitators in other ways.

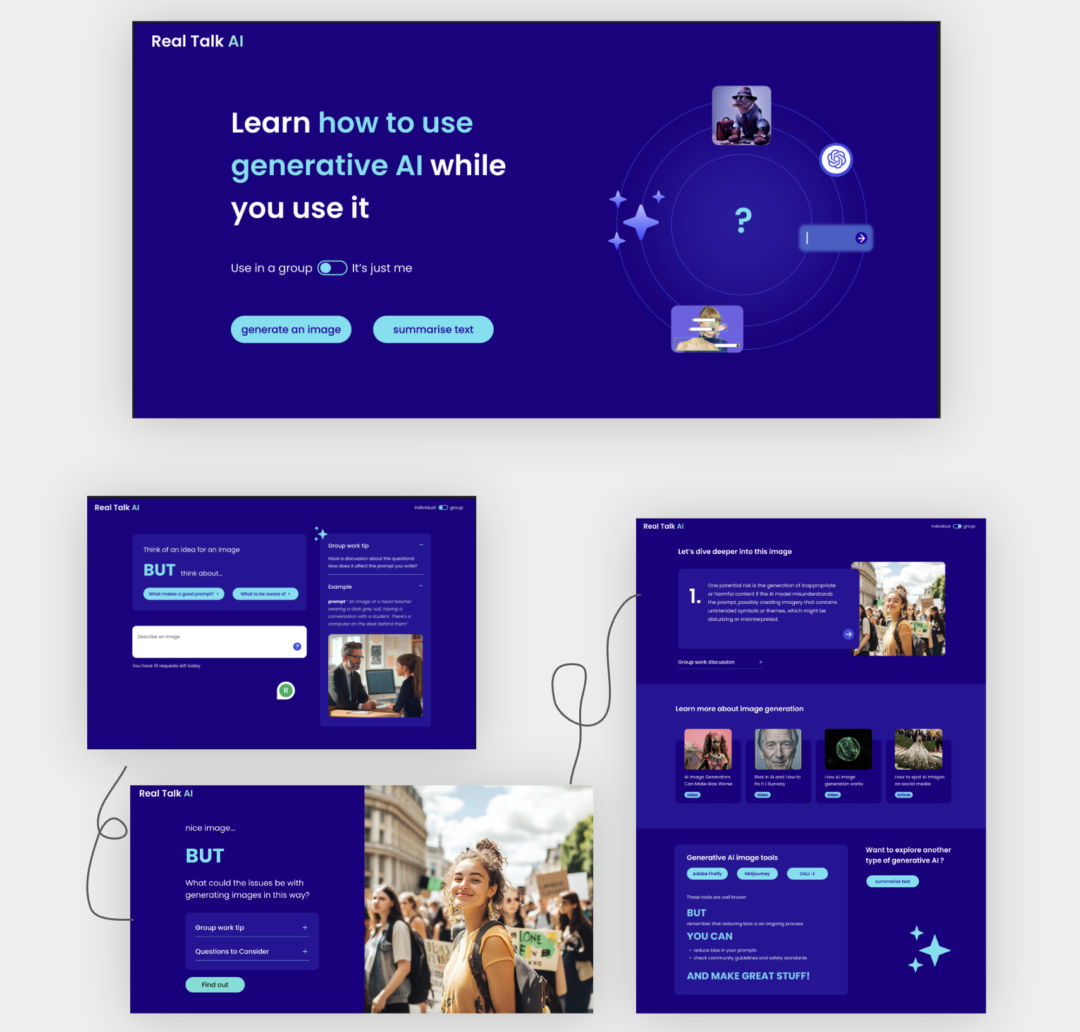

Focussing in on ThinkAI

As a result of the learning we’d gathered, we focussed on ThinkAI as the tool we’d take forward and produced another iteration in Figma. The young people we were working with then tested the tool for usability.

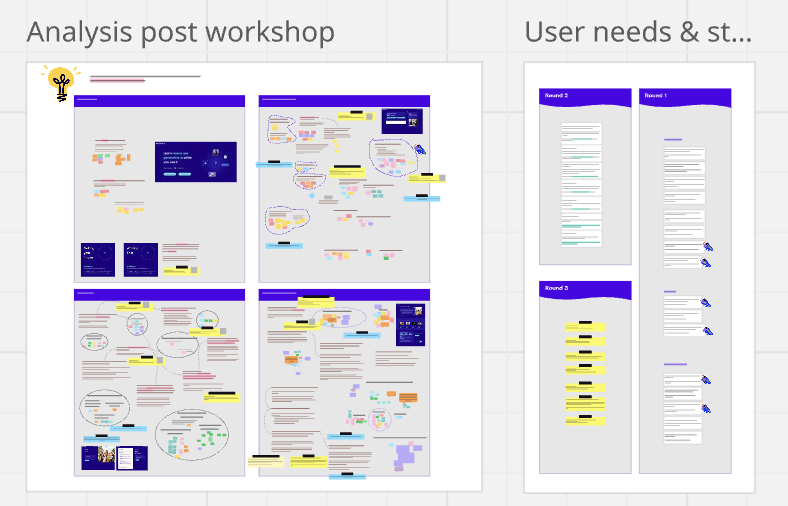

We analysed their feedback in a workshop with our youth service partners. We grouped observations from their testing into themes, and then distilled those themes into user need statements to guide our next design iteration.

Image shows observations from usability testing, grouped into themes, and then user need statements.

What we learned

We’re getting there, but we still need to improve the language, streamline the user journey, and improve our help for facilitators.

What’s next

We’re now deep in the development work for this project. When we’re done, the tool will be live for 6 months for the youth organisations we’ve been working with to use with the young people they support.

Our partner CAST is taking on temporary ownership of the tool while they and the rest of the team look for an organisation or individual to take it on in the longer term.

In the meantime, we’ll be applying for additional funding support with our fantastic youth organisations so we can:

- continue to host and maintain the tool

- gather feedback and data on how it’s being used and the impact it’s having

- make further technical and design improvements

- market the tool to the youth sector (if people don’t know it exists, they won’t use it!)

Please get in touch if you can help.